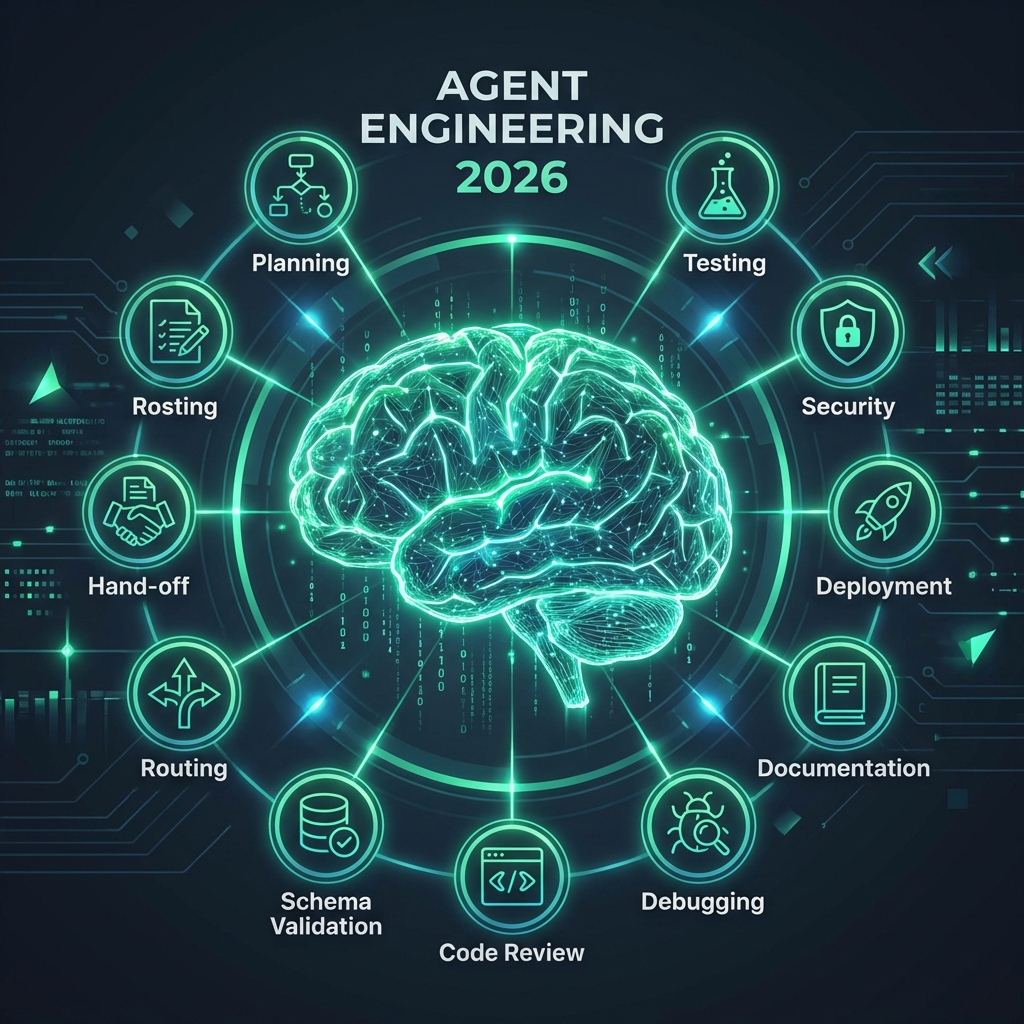

The 10 Agent Skills You Need in 2026: A Guide to Agent Engineering

Stop writing prompts. Start engineering skills. A deep dive into the 10 standardized capabilities your AI workforce needs to move beyond "works on my machine."

In 2025, the industry was obsessed with "Prompt Engineering"—the art of whispering the right words to a model to get a decent result. We spent hours refining system prompts, begging models to "act like a senior engineer", and hoping for the best.

By 2026, that era is effectively over. The myth of the "Generalist Agent"—the AI that can just "figure it out" if you ask nicely—has stalled at the prototype phase.

Enter Agent Engineering.

This new discipline isn't about teaching an LLM to "think". It's about equipping strictly scoped runtimes with deterministic Skill Packs—standardized, versioned, and testable assets that bridge the gap between human intent and machine execution.

If you are building an AI workforce today, you don't need better prompts. You need to install these 10 essential Agent Skills.

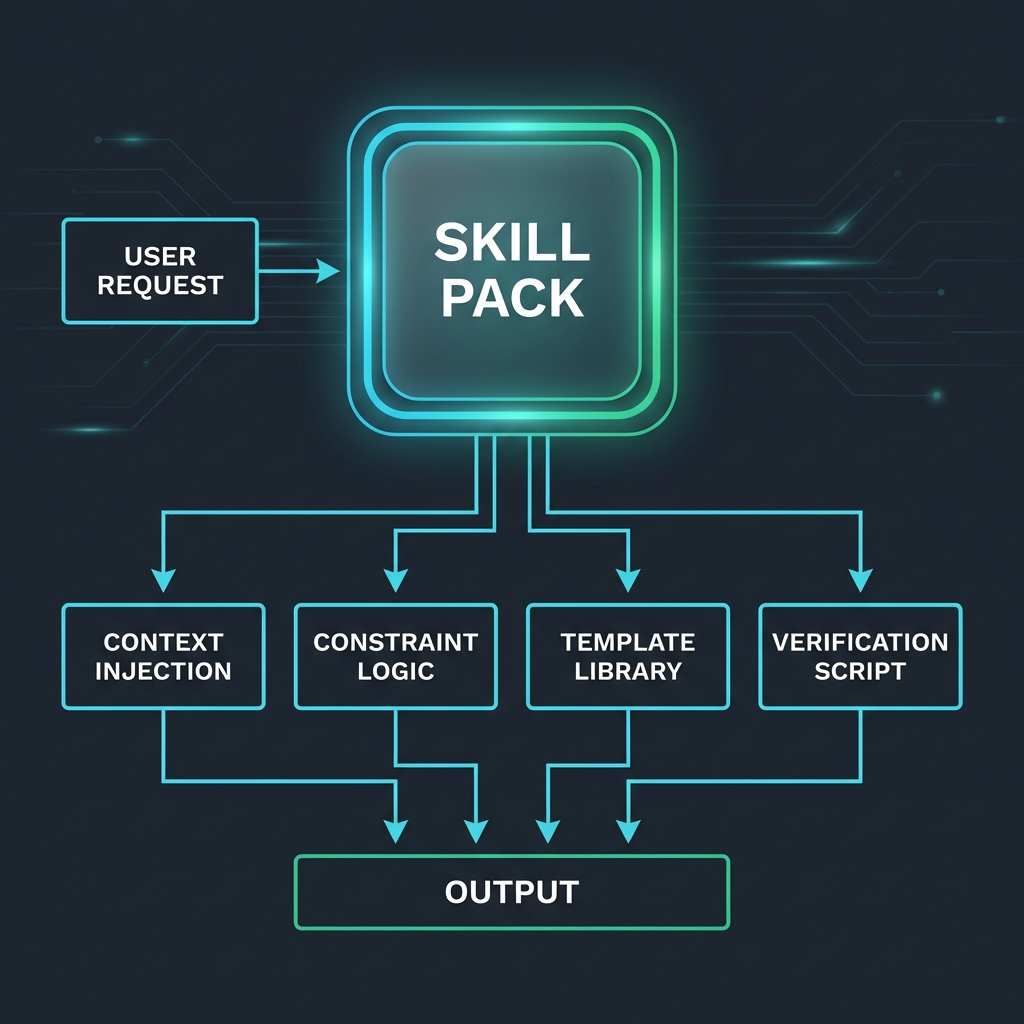

The Shift: From Prompts to "Skill Packs"

Before we dive into the list, we need a precise definition of a "Skill" in the modern context.

- Old Way (2024-2025): A "Skill" was a paragraph in a system prompt. "You are an expert in SQL. Write good queries."

- New Way (2026): A "Skill" is a software package. It contains:

  - Context Injection: The exact schema of your database (minus the data).

  - Constraint Logic: Hard rules such as "Never run `DROP TABLE` with `CASCADE`."

  - Template Library: Pre-validated patterns for common tasks.

  - Verification Script: A secure sandbox that tests the output before it is shown to the human.

The Agent Runtime Architecture

A request flows through a modern Agent Runtime in a fixed order. The "Skill Pack" acts as the central governor: it injects context before the model runs, and it enforces rules on the result before any output is returned.
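Here is a minimal sketch of that flow. It assumes a hypothetical `SkillPack` type that holds the four components of a Skill, and it treats the model as any function from prompt to text. Neither is a real framework API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


# Hypothetical Skill Pack: one field per component of a "Skill".
@dataclass
class SkillPack:
    context: str                                       # Context Injection (e.g. a DB schema)
    constraints: List[Callable[[str], Optional[str]]]  # Constraint Logic: error message or None
    templates: Dict[str, str] = field(default_factory=dict)  # Template Library, keyed by task
    verify: Callable[[str], bool] = lambda output: True      # Verification Script stand-in


def run_request(request: str, task: str, pack: SkillPack,
                model: Callable[[str], str]) -> str:
    """Route one request through the Skill Pack before anything reaches the user."""
    # 1. Inject context (and a matching template, if any) ahead of the request.
    template = pack.templates.get(task, "")
    prompt = f"{pack.context}\n{template}\n{request}"
    # 2. Let the model produce a draft; any prompt -> text function works here.
    draft = model(prompt)
    # 3. Enforce every constraint; the first violation blocks the output.
    for check in pack.constraints:
        error = check(draft)
        if error is not None:
            return f"BLOCKED: {error}"
    # 4. Verify the draft before returning it.
    return draft if pack.verify(draft) else "BLOCKED: verification failed"


pack = SkillPack(
    context="-- schema: users(id, email)",
    constraints=[lambda out: "DROP TABLE is not allowed" if "DROP TABLE" in out.upper() else None],
)
print(run_request("Remove old users", "sql", pack, model=lambda prompt: "DROP TABLE users;"))
# BLOCKED: DROP TABLE is not allowed
```

The model never gets the last word. Its draft is only a candidate until every constraint and the verification step have accepted it.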

The 10 Essential Agent Skills (Deep Dive)

We have analyzed data from millions of AI coding sessions across platforms like Cursor, Windsurf, and our own Context Ark. We identified the 10 non-negotiable skills for a production-ready agent.

1. Context Locking (and /llms.txt)

The Ability to Ignore.

Most agents fail because they know too much, not too little. An agent with retrieval (RAG) access to your entire codebase will find "semantic similarities" between your User Auth module and your Billing module, leading to hallucinated dependencies.

- The Skill: Configuring the Context Engine to "lock" specific files and explicitly exclude everything else.

- Implementation:

  - Level 1: A `.cursorrules` file that lists `ignoring_files`.

  - Level 2: A standardized `/llms.txt` file that provides a concise, high-level map of the repo for external agents.

  - Level 3 (Pro): A `project_kernel.json` that defines the exact "Blast Radius" of a task and blocks the agent from reading anything outside that scope.
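Level 3 can be approximated in a few lines. This sketch assumes a hypothetical `blast_radius` list of glob patterns inside `project_kernel.json`; the key name is illustrative, not a published format. Any read outside those patterns is refused:

```python
import posixpath
from fnmatch import fnmatchcase

# Hypothetical kernel fragment: only these globs are inside the task's "Blast Radius".
# fnmatch's "*" also matches "/", so "src/auth/*" covers nested files too.
KERNEL = {"blast_radius": ["src/auth/*", "tests/auth/*"]}


def in_blast_radius(path: str, kernel: dict = KERNEL) -> bool:
    """True only if the normalized path matches one of the kernel's allowed globs."""
    # normpath collapses "..", so "src/auth/../billing/x.py" becomes "src/billing/x.py".
    normalized = posixpath.normpath(path)
    return any(fnmatchcase(normalized, pattern) for pattern in kernel["blast_radius"])


def locked_read(path: str, kernel: dict = KERNEL) -> str:
    """Read a file only if it is inside the locked context; refuse everything else."""
    if not in_blast_radius(path, kernel):
        raise PermissionError(f"{path} is outside the task's blast radius")
    with open(path, encoding="utf-8") as handle:
        return handle.read()
```

Normalizing the path first matters. Without it, `src/auth/../billing/invoice.py` would match `src/auth/*` and slip past the lock.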

2. Kernel Adherence

The Single Source of Truth.

Your project likely has a "Kernel"—a definition of its DNA (Stack, Rules, Users). The most critical skill is the ability to check every single decision against this Kernel before writing code.

Anti-Pattern:

User: "Add a blog." Agent: "Sure, I'll install WordPress." (Violates Kernel: Stack is Next.js)

Correct Pattern (Skill Enabled):

Agent: "Checking `project_kernel.json`... Stack is Next.js + MDX. Rejection: WordPress is not allowed. Proposal: Create `content/blog` using MDX."
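Here is a minimal sketch of the kernel check, which runs before any install or code generation. It assumes a hypothetical kernel with `stack` and `allowed_dependencies` fields; a real `project_kernel.json` may be structured differently.

```python
import json
from typing import List

# Hypothetical project_kernel.json contents; field names are illustrative only.
KERNEL_JSON = """
{
  "stack": {"framework": "Next.js", "content": "MDX"},
  "allowed_dependencies": ["next", "react", "@next/mdx", "tailwindcss"]
}
"""


def check_against_kernel(proposed: List[str], kernel: dict) -> List[str]:
    """Return one rejection message per proposed dependency the kernel does not allow."""
    allowed = set(kernel["allowed_dependencies"])
    framework = kernel["stack"]["framework"]
    return [
        f"Rejection: {dep} is not allowed (stack is {framework})"
        for dep in proposed
        if dep not in allowed
    ]


kernel = json.loads(KERNEL_JSON)
print(check_against_kernel(["wordpress"], kernel))
# ['Rejection: wordpress is not allowed (stack is Next.js)']
print(check_against_kernel(["@next/mdx"], kernel))
# []
```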

3. Deterministic Routing

Knowing When NOT to Generate.

LLMs are probabilistic. Code is deterministic. A skilled agent knows when to stop "generating" logic and instead "route" to a deterministic tool.

- The Skill: Recognizing "Calculation Intent" or "Lookup Intent" in a request and handing it to a tool.

- Example: Never ask an LLM to calculate a date offset (e.g., "What is 30 days from today?"). It might answer "February 30th". Route the question to a `date_calculator` tool instead.
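Here is a minimal routing sketch. It assumes a single regular expression is enough to detect this one Calculation Intent; a real router would classify intents more robustly. It implements `date_calculator` with Python's `datetime`. The `route` function and its signature are hypothetical.

```python
import re
from datetime import date, timedelta
from typing import Callable, Optional

# Hypothetical detector for one Calculation Intent: "What is N days from today?"
DAYS_FROM_TODAY = re.compile(r"\bwhat is (\d+) days? from today\b", re.IGNORECASE)


def date_calculator(days: int, today: Optional[date] = None) -> date:
    """Deterministic tool: calendar arithmetic can never produce 'February 30th'."""
    return (today or date.today()) + timedelta(days=days)


def route(query: str, llm: Callable[[str], str], today: Optional[date] = None) -> str:
    """Send recognized calculation intents to the tool; everything else goes to the LLM."""
    match = DAYS_FROM_TODAY.search(query)
    if match:
        return date_calculator(int(match.group(1)), today).isoformat()
    return llm(query)


print(route("What is 30 days from today?", llm=lambda q: "(LLM answer)",
            today=date(2026, 1, 31)))
# 2026-03-02
```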

4. Schema Enforcement

No More JSON Hallucinations.

Agents love to invent new JSON fields. They will see user.id and hallucinate user.uuid. A "Schema Enforcement" skill wraps every output in a strict Zod or Pydantic validator tailored to your project's specific API types.

- Benefit: No runtime type errors from mismatched API payloads in generated frontend code.

- Implementation: Injecting your Swagger/OpenAPI spec directly into the system prompt as a "Types" block, and asking the agent to `<validate>` its output against it.
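In practice, Pydantic (with `extra="forbid"` in the model config) or Zod (`.strict()`) rejects unknown keys for you. Here is a dependency-free sketch of the same idea. It assumes a hypothetical `User` type that mirrors your API. The strict validator refuses the hallucinated `uuid` field instead of passing it through.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict


@dataclass
class User:
    # Hypothetical API type; in practice it would be generated from your OpenAPI spec.
    id: int
    email: str


def validate_strict(cls: type, payload: Dict[str, Any]):
    """Reject unknown fields, missing fields, and wrong types, then build the object."""
    expected = {f.name: f.type for f in fields(cls)}
    unknown = set(payload) - set(expected)
    if unknown:
        raise ValueError(f"unknown fields: {sorted(unknown)}")
    missing = set(expected) - set(payload)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, expected_type in expected.items():
        # Works for simple annotations such as int and str (not generics like List[int]).
        if not isinstance(payload[name], expected_type):
            raise TypeError(f"{name} should be {expected_type.__name__}")
    return cls(**payload)


print(validate_strict(User, {"id": 1, "email": "ada@example.com"}))
# User(id=1, email='ada@example.com')
try:
    validate_strict(User, {"id": 1, "email": "ada@example.com", "uuid": "f3a9"})
except ValueError as err:
    print(err)
# unknown fields: ['uuid']
```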

5. Dependency Awareness (DAG Analysis)

Understanding "Before and After."

You cannot implement a UI component before the API endpoint exists. A skilled agent builds a Directed Acyclic Graph (DAG) of the implementation plan and refuses to write Step B until Step A passes tests.

- The Check: "Does `api/users` exist? No. Halted: Cannot build `UserList.tsx`."
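Python's standard `graphlib` module can both order the plan and reject cycles. Here is a minimal sketch. It assumes a hypothetical plan that maps each step to its prerequisites, plus a set of steps whose tests have already passed.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical plan: each step maps to the steps that must pass their tests first.
PLAN: Dict[str, Set[str]] = {
    "api/users": set(),
    "UserList.tsx": {"api/users"},
    "app/users/page.tsx": {"UserList.tsx"},
}


def build_order(plan: Dict[str, Set[str]]) -> List[str]:
    """A valid implementation order; raises graphlib.CycleError if the plan is not a DAG."""
    return list(TopologicalSorter(plan).static_order())


def check_step(plan: Dict[str, Set[str]], passed: Set[str], step: str) -> str:
    """Allow a step only when every direct prerequisite has passed its tests."""
    missing = sorted(plan.get(step, set()) - passed)
    if missing:
        return f"Halted: cannot build {step}; missing {', '.join(missing)}"
    return f"OK: build {step}"


print(build_order(PLAN))
# ['api/users', 'UserList.tsx', 'app/users/page.tsx']
print(check_step(PLAN, passed=set(), step="UserList.tsx"))
# Halted: cannot build UserList.tsx; missing api/users
```

Checking only direct prerequisites is enough here. Each of them was itself gated on its own prerequisites before it could pass.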

6. Secret Hygiene

The "Redaction" Reflex.

This should be hard-coded. An agent must have a pre-processor skill that scans all input for high-entropy strings (random-looking tokens such as API keys) and redacts them before they ever reach the context window.

- 2026 Standard: If an agent sees a `.env` file, it should treat it as radioactive. It should never output the contents of a `.env` file, even if explicitly asked.
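Here is a minimal sketch of the redaction pre-processor, using Shannon entropy per character. The 20-character minimum and the 4.0 bits/char threshold are assumptions you would need to tune. Entropy scanning also flags legitimate random strings such as hashes and UUIDs, so expect some false positives.

```python
import math
import re
from collections import Counter

# Candidate secrets: runs of 20+ key-like characters ("=" excluded so "KEY=value" splits).
TOKEN = re.compile(r"[A-Za-z0-9+/_\-]{20,}")


def shannon_entropy(s: str) -> float:
    """Bits per character, estimated from the character frequencies in s."""
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in Counter(s).values())


def redact(text: str, threshold: float = 4.0) -> str:
    """Replace high-entropy tokens with a placeholder before text reaches the model."""
    return TOKEN.sub(
        lambda m: "[REDACTED]" if shannon_entropy(m.group()) >= threshold else m.group(),
        text,
    )


def is_radioactive(path: str) -> bool:
    """Treat .env and .env.* files as off-limits regardless of what the user asks."""
    name = path.replace("\\", "/").rsplit("/", 1)[-1]
    return name == ".env" or name.startswith(".env.")


print(redact("API_KEY=k9Xq2LmZ7vR4tB8nW1yH6pJ3 page=user_profile_settings_page"))
# API_KEY=[REDACTED] page=user_profile_settings_page
```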

7. Test-Driven Generation (TDG)

Tests First, Code Second.

The "Vibe Coding" movement of 2025 was fun, but it shipped bugs. The mature skill is TDG:

- Write the failing test (e.g., `user.test.ts`).

- Verify it fails (red).

- Write the implementation.

- Verify it passes (green).

The Constraint: The agent is not allowed to show you the code until the test passes.
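The red/green constraint can be enforced mechanically. Here is a minimal sketch. It assumes tests are plain Python callables that raise on failure, standing in for a real test runner. `passes` and `tdg_gate` are hypothetical names.

```python
from typing import Any, Callable, Optional

Impl = Callable[..., Any]
Spec = Callable[[Impl], None]  # a test that receives the implementation under test


def passes(spec: Spec, impl: Impl) -> bool:
    """Green if the test returns normally; any exception (failed assert or crash) is red."""
    try:
        spec(impl)
        return True
    except Exception:
        return False


def tdg_gate(spec: Spec, stub: Impl, candidate: Impl) -> Optional[Impl]:
    """Release the candidate only if the test was red on the stub and is green on it."""
    if passes(spec, stub):
        raise ValueError("test passes against the stub, so it proves nothing")
    return candidate if passes(spec, candidate) else None


# Usage: a test written first, a stub that must fail it, and a candidate implementation.
def slugify_spec(slugify: Impl) -> None:
    assert slugify("Hello World") == "hello-world"


def stub(text: str) -> str:
    raise NotImplementedError


def candidate(text: str) -> str:
    return text.lower().replace(" ", "-")


print(tdg_gate(slugify_spec, stub, candidate) is candidate)
# True
```

Requiring the stub to fail first guards against a test that passes for the wrong reason. Such a test would let any candidate through.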

8. Self-Correction

Detecting the "Doom Loop".

We've all seen an agent try to fix a bug, fail, and try the exact same fix again. A Self-Correction skill includes a "History Hash" monitor to detect repetitive loops and force a strategy shift.

- Trigger: "I have attempted this fix 3 times. Standard deviation of solution is 0. Aborting. Requesting human help."
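Here is a minimal sketch of a History Hash monitor. It assumes each fix attempt is available as patch text. The class name and the whitespace normalization are illustrative choices, not a standard.

```python
import hashlib
from collections import Counter


class LoopDetector:
    """A 'History Hash' monitor: counts how often the exact same fix is attempted."""

    def __init__(self, max_repeats: int = 3):
        self.max_repeats = max_repeats
        self.seen = Counter()

    def record(self, patch: str) -> str:
        # Collapse whitespace so trivially reformatted patches hash identically.
        normalized = " ".join(patch.split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        self.seen[digest] += 1
        if self.seen[digest] >= self.max_repeats:
            return (f"ABORT: same fix attempted {self.seen[digest]} times; "
                    "requesting human help")
        return "CONTINUE"


detector = LoopDetector()
for attempt in ["x = user.id", "x  =  user.id", "x = user.id\n"]:
    print(detector.record(attempt))
# CONTINUE
# CONTINUE
# ABORT: same fix attempted 3 times; requesting human help
```

Exact hashing only catches identical retries. Detecting fixes that are merely similar would need a fuzzier comparison.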

9. Doc-Code Parity

Documentation as Code.

Documentation is not an afterthought; it is a compiled artifact. This skill forces the agent to update the /docs directory in the same commit as the code change.

- The Rule: CI/CD fails if code changes but docs do not. The agent must run `npm run docs:generate` before committing.
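Here is a minimal sketch of the CI rule. It assumes code lives under `src/` and docs under `docs/`; adjust these hypothetical prefixes to your layout. `git diff --name-only` supplies the list of changed files.

```python
import subprocess
from typing import List

CODE_PREFIXES = ("src/",)  # hypothetical layout: where code lives
DOCS_PREFIX = "docs/"      # the /docs directory from the rule above


def parity_ok(changed_files: List[str]) -> bool:
    """False when code changed in this diff but nothing under docs/ did."""
    code_changed = any(path.startswith(CODE_PREFIXES) for path in changed_files)
    docs_changed = any(path.startswith(DOCS_PREFIX) for path in changed_files)
    return docs_changed or not code_changed


def changed_files(base: str = "origin/main") -> List[str]:
    """Files changed on this branch since it diverged from `base` (assumes a git checkout)."""
    result = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()


print(parity_ok(["src/api/users.ts"]))                       # False: code without docs
print(parity_ok(["src/api/users.ts", "docs/api/users.md"]))  # True
```

In CI, a step would call `parity_ok(changed_files())` and exit non-zero on `False`. That failure sends the agent back to run `npm run docs:generate`.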

10. Human Hand-off (The Bridge)

Knowing Its Limits.

The ultimate skill is humility. A robust agent calculates a "Confidence Score" for every plan. If the score drops below a threshold (e.g., 70%), it stops and triggers a notify_user event.
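How the Confidence Score is computed is up to the runtime. This sketch assumes each plan step already carries an estimate and scores the plan by its weakest step. It uses the 70% threshold from the example above. The `decide` function and the event shape are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    description: str
    confidence: float  # 0.0 to 1.0; assumed to be estimated elsewhere by the runtime


def plan_confidence(steps: List[Step]) -> float:
    """One simple aggregation: a plan is only as confident as its weakest step."""
    return min((step.confidence for step in steps), default=0.0)


def decide(steps: List[Step], threshold: float = 0.7) -> dict:
    """Execute above the threshold; otherwise emit a hypothetical notify_user event."""
    score = plan_confidence(steps)
    if score < threshold:
        return {"event": "notify_user", "confidence": score,
                "reason": "plan confidence below threshold"}
    return {"event": "execute", "confidence": score}


plan = [Step("add users table migration", 0.9), Step("rewrite auth flow", 0.55)]
print(decide(plan)["event"])
# notify_user
```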

Tutorial: How to Build These Skills (Hands-On)

You could spend months writing system prompts and fine-tuning models to teach your agents these skills. Or you could use the Skill Generator to create them instantly.

Step 1: Launch the Skill Generator

Navigate to the Free Skill Generator Tool. You don't need an account to start.

Step 2: Define your Context

The generator will ask for your "Brain Dump". This is where you inject your Project Kernel.

- Input: "I am building a Next.js SaaS for pet grooming. We use Supabase and Tailwind."

- Result: The generator parses this into a structured JSON Kernel.

Step 3: Select "Project Dynamic" Tier

Choose the Project Tier. This ensures the skills are not generic ("Write React") but specific ("Write React using my Button component").

Step 4: Export to Your Runtime

Select your preferred agent:

- Cursor: Generates a `.cursorrules` file.

- Claude: Generates a long-context system prompt.

- Windsurf: Generates a `.windsurfrules` file.

Step 5: Install

Download the ZIP. Unpack it into your repo root. Now, when you ask Cursor "Create a new page", it will:

- Read your Kernel.

- Check for existing components (Context Locking).

- Validate against your Design System (Schema Enforcement).

Summary

The difference between a "Toy Agent" and a "Workforce" is reliability. Reliability does not come from magic. It comes from engineering.

By treating Agent Skills as distinct, measurable, and enforceable software assets, we move from the unpredictable world of "chatting with bots" to the engineered world of Context Ark.

Context Ark Team

Writing about AI, documentation, and developer tools
